Yehonathan Litman

I'm a fourth-year PhD student in the Robotics Institute at Carnegie Mellon University, advised by Shubham Tulsiani and Fernando De la Torre. I have previously interned at Meta Reality Labs and was a visiting researcher at FAIR. My research focuses on how large generative models can serve as priors for faithfully reconstructing the world by capturing its underlying intrinsic properties. My work is supported in part by an NSF Graduate Research Fellowship. I am always open to talking about research ideas or potential collaborations, so feel free to email me.

Email / CV / Google Scholar / Twitter / GitHub

Research

EditCtrl: Disentangled Local and Global Control for Real-Time Generative Video Editing

Yehonathan Litman, Shikun Liu, Dario Seyb, Nicholas Milef, Yang Zhou, Carl Marshall, Shubham Tulsiani, Caleb Leak

CVPR, 2026

project page / arXiv / github

An efficient video inpainting control framework that spends computation only where it is needed, achieving more than 10x greater compute efficiency than state-of-the-art generative editing methods while matching or improving editing quality.

Real-Time Neural Materials on Mobile VR

Zilin Xu, Yang Zhou, Yehonathan Litman, Matt Jen-Yuan Chiang, Lingqi Yan, Anton Michels

Eurographics, 2026

project page

A compact, coarse-to-fine neural material model that uses texture-space shading and spatiotemporal amortization of computation, achieving over 90 FPS on Meta Quest 3 with visual quality comparable to NeuMIP.

LightSwitch: Multi-view Relighting with Material-guided Diffusion

Yehonathan Litman, Fernando De la Torre, Shubham Tulsiani

ICCV, 2025

project page / arXiv / github

Efficiently relight any number of input images to a target lighting condition using inferred intrinsic property cues; the approach extends to relighting novel views in 3D.

MaterialFusion: Enhancing Inverse Rendering with Material Diffusion Priors

Yehonathan Litman, Or Patashnik, Kangle Deng, Aviral Agrawal, Rushikesh Zawar, Fernando De la Torre, Shubham Tulsiani

3DV, 2025

project page / arXiv / github

Distill a material diffusion prior into material parameters during inverse rendering to improve intrinsic decomposition and relighting under novel illumination.

Global Visual‑Inertial Ground Vehicle State Estimation via Image Registration

Yehonathan Litman*, Daniel McGann*, Eric Dexheimer, Michael Kaess

ICRA, 2022

video / IEEEXplore

Reconstruct input images in a 3D occupancy grid, project the grid to a bird's-eye view of the environment, and register that view to satellite imagery to correct SLAM drift in real time without requiring GPS.

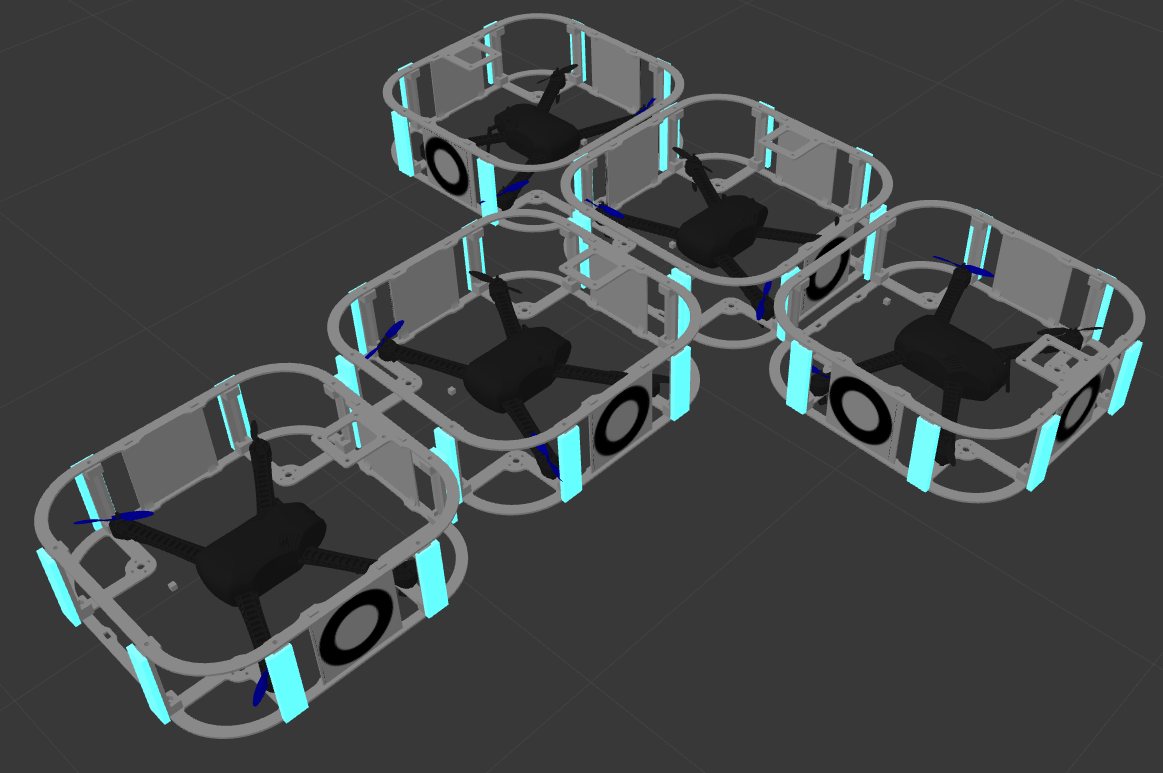

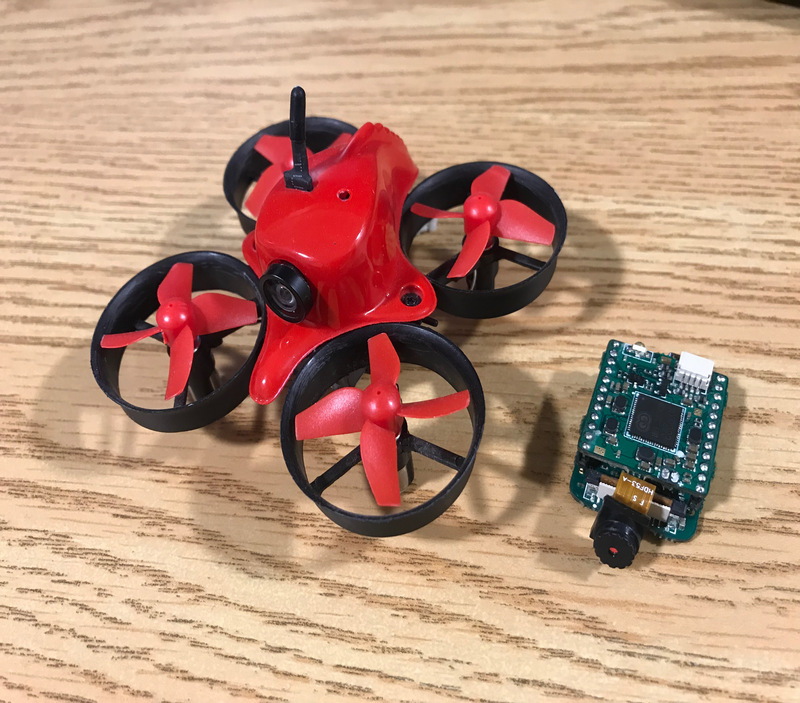

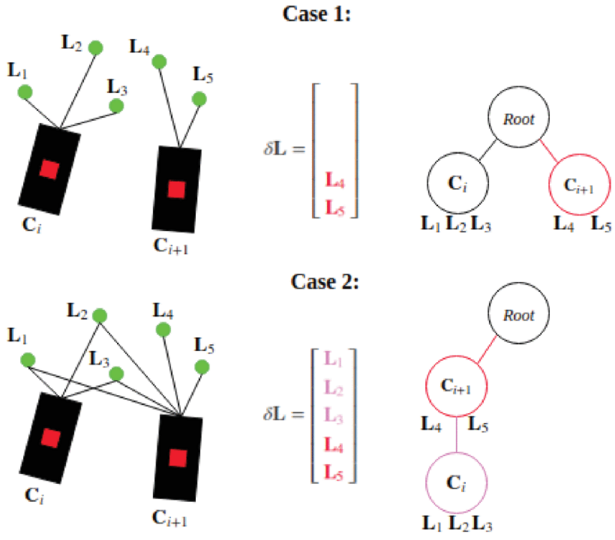

Vision‑Based Self‑Assembly for Modular Multirotor Structures

Yehonathan Litman, Neeraj Gandhi, Linh Thi Xuan Phan, David Saldaña

IEEE RA-L & ICRA, 2021 (Finalist in Multi‑Robot Systems)

video / IEEEXplore

Dynamically assign a leader drone to heuristically assemble a wide variety of swarm formations using only vision and onboard sensors.

Accelerated Visual Inertial Navigation via Fragmented Structure Updates

Yehonathan Litman, Ya Wang, Ji Liu

IROS, 2019

IEEEXplore

Selectively retain features across frames based on shared information, and use hardware acceleration to dramatically improve the efficiency of VIO SLAM.

Invariant Filter Based Preintegration for Addressing the Visual Observability Problem

Yehonathan Litman, Ya Wang, Libo Wu

IEEE MIT URTC, 2018

IEEEXplore

Address the visual observability problem in VIO by using invariant filtering to improve efficiency and accuracy.

Awards

Misc.

Save the Manatees! Even if you're not from Florida (or any of the other places that have manatees), you can still help!

Credits: Jon Barron.